Deepfake: Three threatening scenarios facing users in 2023

According to the World Economic Forum (WEF), the number of deepfake videos on the internet is growing by around 900% per year. Many deepfake scams have been widely publicised, including cases of harassment, revenge and attempted cryptocurrency fraud. Kaspersky researchers have now identified the three main deepfake scams that users should be aware of.

Neural networks and deep learning (hence "deepfake") allow users around the world to take images, video and audio and create realistic videos in which a person's face or body has been digitally altered so that they appear to be someone else. These manipulated videos and images are often used for malicious purposes, such as spreading false information.

Financial fraud

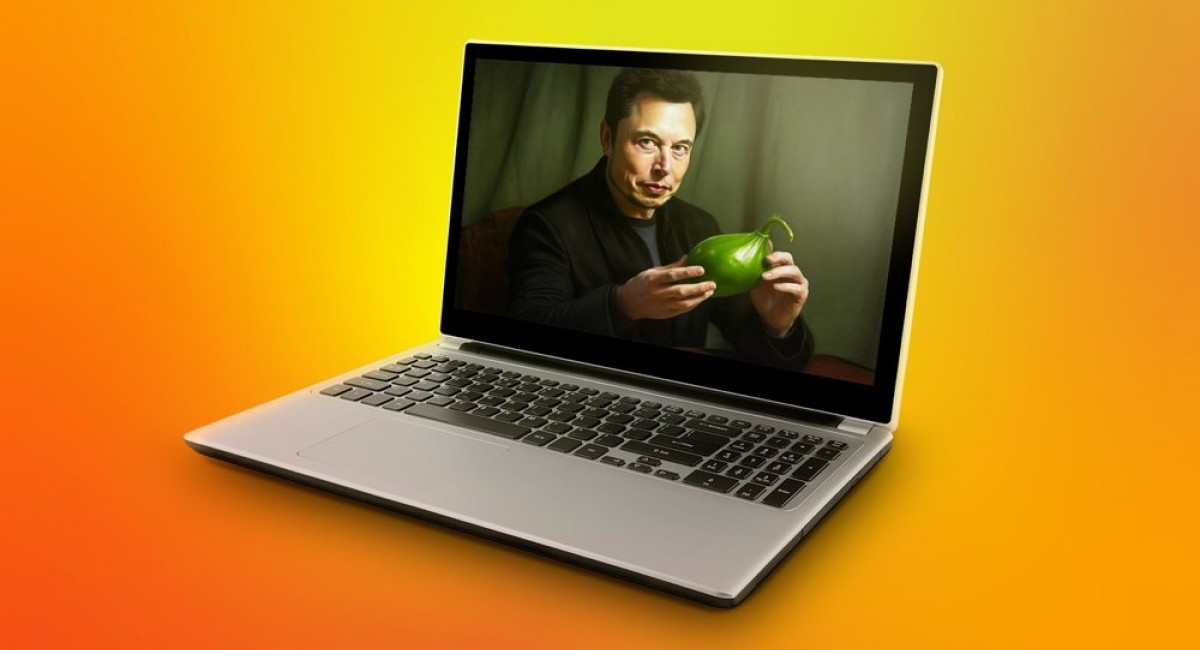

Deepfakes can be used for social engineering, with criminals using altered images to impersonate celebrities and lure victims into a trap. For example, last year a fake video of Elon Musk promising high returns from a dubious cryptocurrency investment scheme went viral, and the victims of the scam lost their money. To create deepfakes like this one, criminals take existing celebrity footage or splice together old videos, live-stream the result on social media platforms, and promise to double every cryptocurrency payment sent to them.

Pornographic deepfakes

Deepfakes are also used to violate people's privacy. A deepfake video can be created by superimposing a person's face onto a pornographic video, causing harm and distress. In one case, deepfake videos appeared online showing the faces of certain celebrities superimposed on the bodies of actors and actresses in pornographic films. In cases like this, the victims suffer damage to their reputation and a violation of their rights.

Business risk

Deepfakes are also used to harm businesses, for example through blackmailing company executives or industrial espionage. In one well-known case, cybercriminals used a deepfake voice to defraud a bank manager in the United Arab Emirates and steal $35 million. A short recording of the manager's boss's voice was enough to create a convincing deepfake. In another case, scammers tried to deceive Binance, the largest cryptocurrency platform. A Binance executive was surprised when he started receiving thank-you messages about a Zoom meeting he had never attended. Using only images of him that were publicly available online, the scammers had created a deepfake and used it in an online meeting, speaking in the executive's place.

In general, fraudsters who exploit deepfakes seek to spread misinformation and manipulate public opinion, to blackmail, or even to carry out espionage. According to an FBI warning, human resources managers are already on alert for candidates using deepfakes to apply for remote jobs. In the Binance case, attackers used images of people found on the internet to create deepfakes and were even able to add those people's photos to resumes. If attackers manage to fool HR managers in this way and later receive a job offer, they can steal the employer's data.

It is also important to keep in mind that deepfakes require significant funding: they are a very expensive form of fraud. Previous Kaspersky research revealed the types of deepfakes sold on the darknet and what they cost. If an ordinary user finds some simple software on the internet and tries to create a deepfake, the result will be unrealistic. Few people will be fooled by a poor-quality deepfake: they will notice lagging facial expressions or a blurred outline of the chin.

When cybercriminals prepare such a scam, they therefore need a large collection of photos and video clips of the person they want to impersonate. Different camera angles, lighting conditions and facial expressions all play a big role in the quality of the final result. Powerful modern hardware and software are also essential for a realistic result. All this requires substantial resources that only a small number of cybercriminals have. So despite all the harm a deepfake can cause, it is still an extremely rare threat, and only a small number of buyers can afford one: the price of a single minute of deepfake video can start at USD 20,000.

Continuous monitoring of money flows on the dark web gives us valuable information about the deepfake industry, allowing researchers to track the latest trends and activities of threat actors in this space. By monitoring the darknet, researchers can uncover new tools, services and markets used to create and distribute deepfakes. This type of monitoring is a critical component of deepfake research and improves our understanding of the evolving threat landscape. Kaspersky's Digital Footprint Intelligence service includes this type of monitoring to help its customers stay ahead of deepfake-related threats.

To protect against deepfake-related threats, Kaspersky recommends:

- Audit the cybersecurity practices in place in your organization, covering not only software but also your team's IT skills. Use Kaspersky Threat Intelligence to stay ahead of the current threat landscape.

- Strengthen your corporate "human firewall": make sure employees understand what deepfakes are, how they work and the challenges they can pose. Run ongoing awareness training so that employees can recognize a deepfake. The Kaspersky Automated Security Awareness Platform helps employees stay up to date on the latest threats and raises their digital literacy.

- Seek in-depth information from trusted sources. Poor information literacy remains a critical factor in the spread of deepfakes.

- Adopt sound practices such as "trust but verify". A cautious attitude towards audio and video messages does not guarantee that you will never be deceived, but it can help you avoid many pitfalls.

- Learn the telltale signs of deepfake videos so you can avoid falling victim: jerky movements, lighting that changes from one frame to the next, shifts in skin tone, strange blinking or no blinking at all, lips out of sync with speech, digital fingerprints in the image, and video deliberately made in low quality or with poor lighting.