Meta uses AI to ensure that teens are using Teen Accounts

Meta is taking new steps to expand its teen safety initiatives across Facebook, Messenger, and Instagram by directly involving parents and leveraging artificial intelligence (AI) to identify and protect underage users. Following the introduction of teen accounts on Instagram last year, the company is now rolling out similar protections for young users on Facebook and Messenger, signaling a broader effort to establish safer digital environments for adolescents.

Direct Parental Involvement Begins

Starting this week, Meta has launched a new outreach campaign targeting parents on Instagram. The campaign aims to help parents talk with their children about online behavior, specifically about being honest about their age. As part of the campaign, Meta is sending notifications to parents that explain how to check and, if necessary, correct the age listed on their child’s Instagram profile.

In collaboration with pediatric psychologist Dr. Ann-Louise Lockhart, Meta has developed a set of conversation tips for parents. These suggestions are designed to help them talk with their children about why it matters to represent their real age online. Once a child’s age is corrected, the account is automatically moved into a teen account, which offers enhanced protections.

Meta believes this outreach could also encourage parents to take a more active role in monitoring their children’s digital lives. Because platforms change frequently, many parents are unsure how to supervise their child’s usage, and Meta’s initiative offers a practical entry point for families to start talking about digital safety.

Using AI to Fill the Gaps

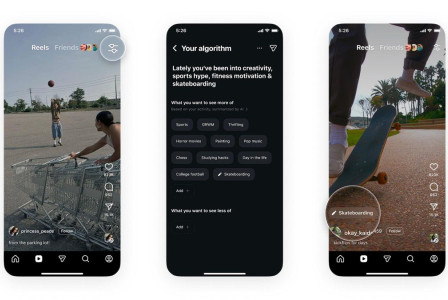

Despite the benefits of direct communication with parents, Meta acknowledges that not all parents will act on the notifications, and some may not even have accounts to receive them. To address these limitations, the company is deploying AI tools, notably in the U.S., to identify users who appear to be underage based on behavioral patterns, even if the details on their account suggest otherwise.

Meta has been using AI-driven age estimation techniques for some time, but this latest application represents a significant shift in enforcement strategy. The technology will now be used proactively to convert suspected underage users into teen accounts, thereby applying additional safety measures automatically.

In a statement, Meta noted:

“We’ve been using artificial intelligence to determine age for some time, but leveraging it in this way is a big change. We’re taking steps to ensure our technology is accurate and that we are correctly placing teens we identify into protective, age-appropriate settings, but in case we make a mistake, we’re giving people the option to change their settings.”

What Are Teen Accounts?

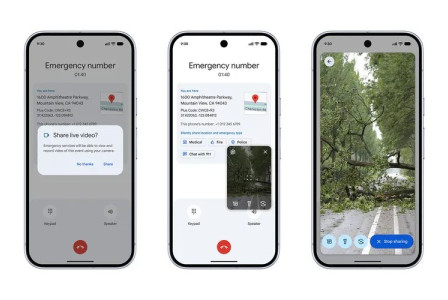

Teen accounts on Meta platforms come with several built-in safeguards designed specifically for users under 18. On Instagram, for example, teen profiles are set to private by default, so their posts and stories can be seen only by people whose follow requests the teen has accepted. This protects young users from unsolicited attention.

Additionally, direct messaging is heavily restricted. Teens can only receive messages from people they follow or are connected with, reducing the risk of receiving unwanted or inappropriate communications. Other features include time management tools such as screen time reminders and a sleep mode, aimed at promoting healthy digital habits.

These safety enhancements reflect Meta’s broader commitment to child and teen online safety, a topic that has drawn increasing scrutiny from regulators and child advocacy groups in recent years.

Balancing Technology with Responsibility

While the use of AI is promising, Meta emphasizes that it is not a perfect solution. The company acknowledges the risk of misidentifying users and lets anyone who is incorrectly categorized appeal and adjust their account settings.

The integration of AI with parental outreach represents a two-pronged strategy: encouraging active parental involvement while using technology to cover the gaps that outreach alone leaves. The effort seeks to normalize accurate age representation, empower parents, and reduce the risks teens face on social media platforms.

As more teens join digital platforms at younger ages, Meta’s evolving approach highlights a trend toward platform accountability and proactive safety design. Whether this combination of parental partnership and algorithmic enforcement becomes the new standard for online youth safety remains to be seen, but Meta appears committed to leading that charge.
