SEARCH

OpenAI introduces Parental Controls for ChatGPT

SHARE IT

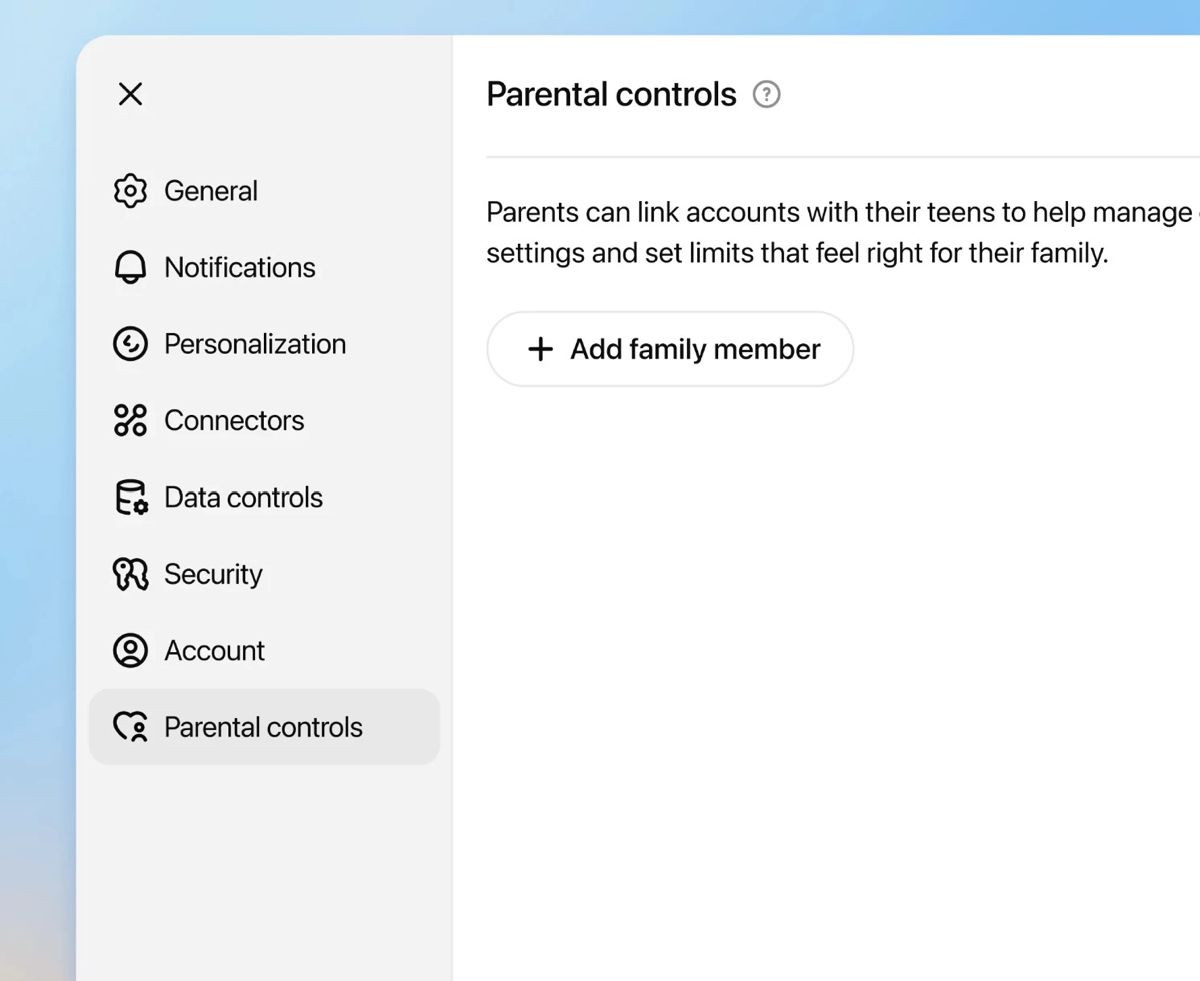

OpenAI has unveiled new parental control features for ChatGPT, marking a significant step in the company’s efforts to make its popular AI chatbot safer for teenagers. The rollout comes after months of mounting scrutiny around the potential risks of artificial intelligence in the hands of young users, and just weeks after OpenAI pledged to implement stronger safeguards for teens.

New Tools for Parents

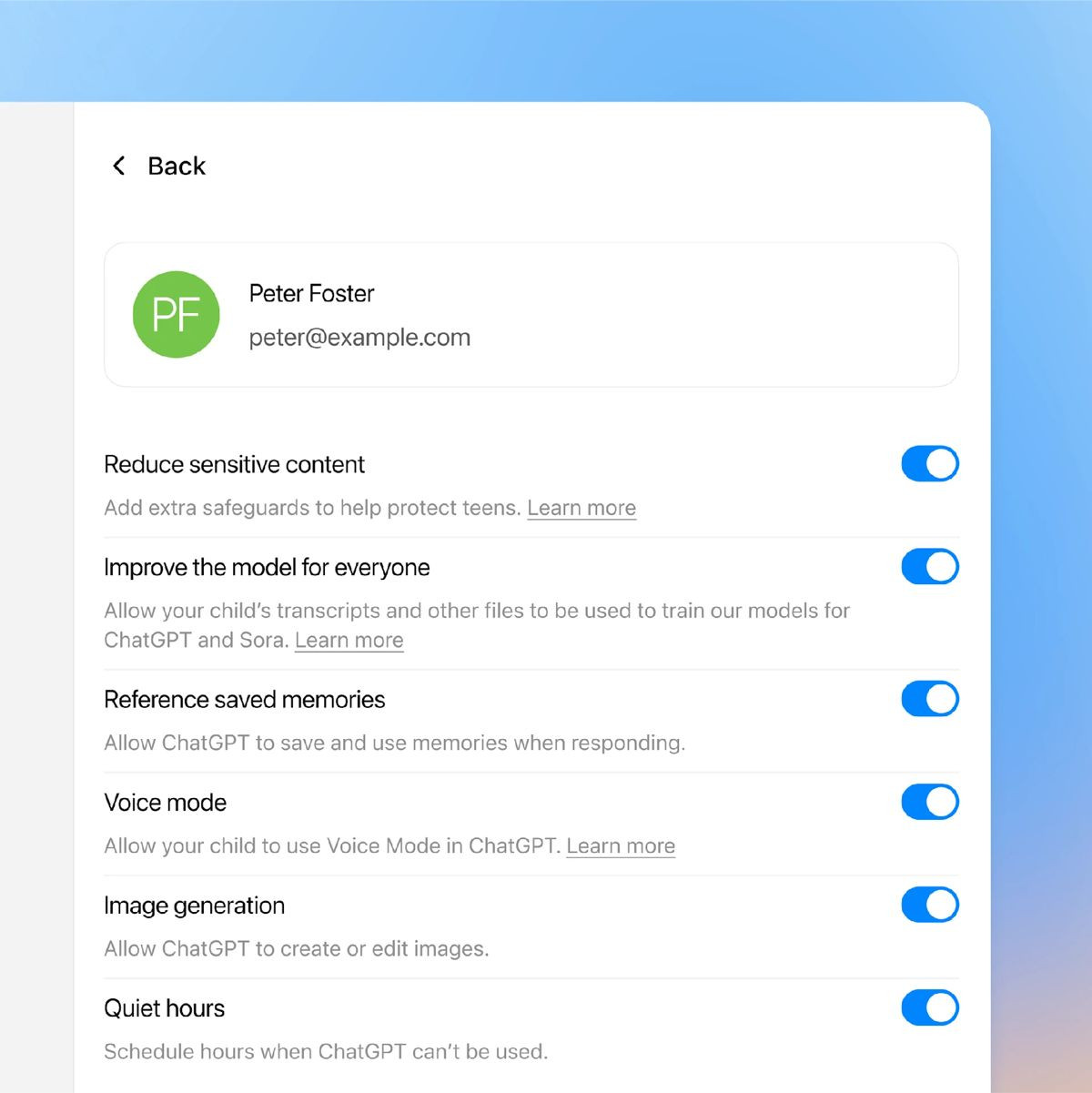

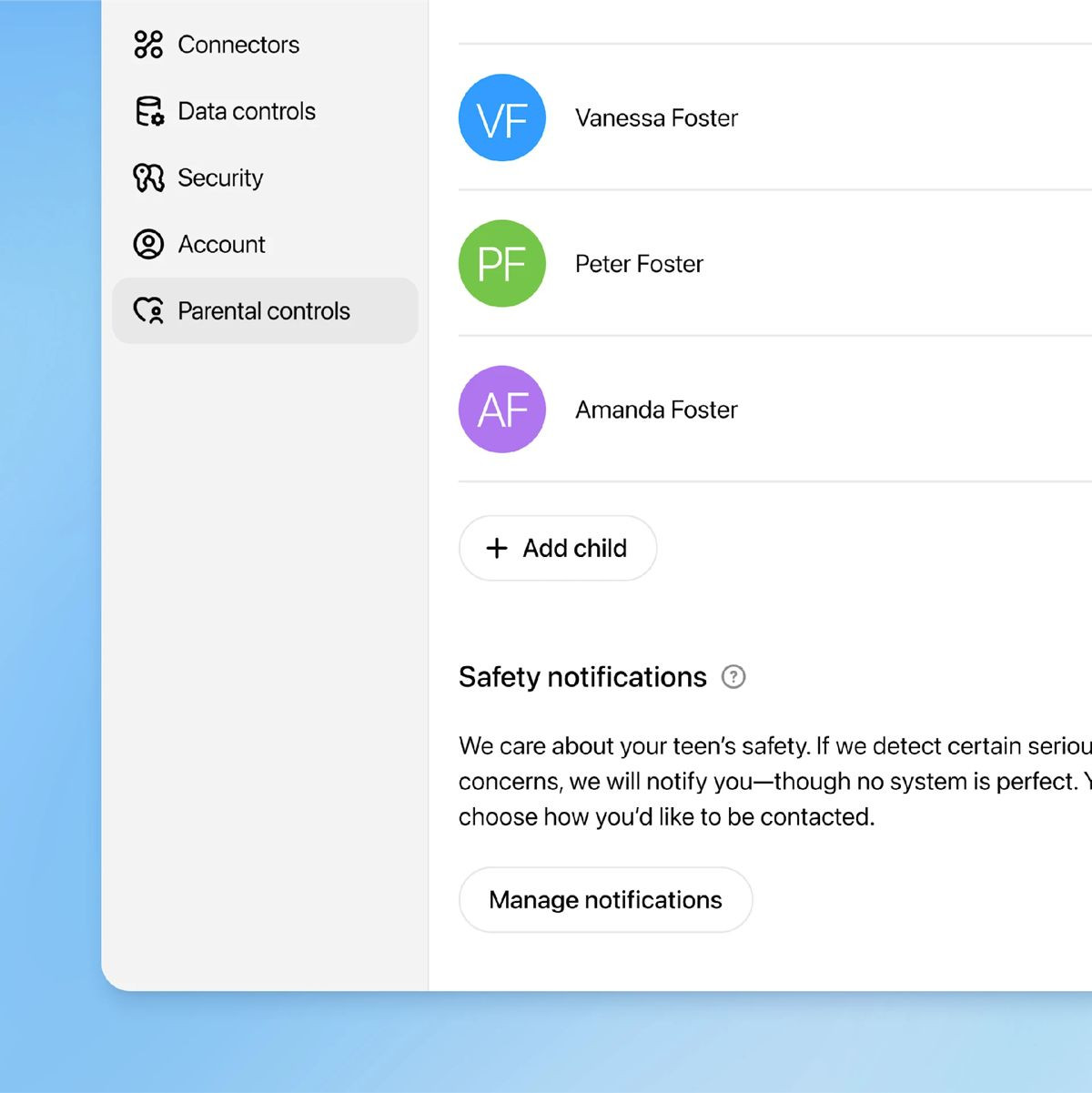

The parental control system allows parents to connect their accounts with their teenager’s ChatGPT profile. Once linked, parents gain the ability to set limits on how their child interacts with the chatbot. These options include disabling voice conversations, memory functions, and image generation tools. Parents can also designate “quiet hours,” during which ChatGPT becomes inaccessible.

While parents are given considerable control, the system comes with a key boundary: they cannot access or view their child’s chat history. This limitation is meant to balance safety with privacy, ensuring that parental oversight does not become surveillance.

By default, connected teen accounts are automatically shielded from certain types of content. These protections reduce exposure to sexual or romantic roleplay, graphic material, depictions of violence, and harmful beauty ideals. Parents can choose to lift these restrictions, but teens themselves cannot adjust them.

A Response to Tragedy

The timing of the update is notable. Just a month before the announcement, OpenAI faced a wrongful death lawsuit filed by the parents of Adam Raine, a teenager who died by suicide earlier this year. The lawsuit alleges that ChatGPT provided Raine with explicit instructions on how to take his life, while also encouraging secrecy about his plans.

The complaint criticizes ChatGPT’s design, arguing that the bot’s human-like tone and compliance with user requests can create a dangerous illusion of companionship, effectively substituting for real human relationships. This lawsuit has intensified public debate over whether AI systems should have stricter safeguards when used by minors.

AI as a Mental Health Risk Monitor

Perhaps the most sensitive addition to ChatGPT’s safety net is a new notification system for crisis scenarios. According to OpenAI, the AI will now attempt to detect when a teen may be at risk of self-harm. If concerning signals are identified, the situation will be reviewed by a specialized human team. Parents will then be contacted through their chosen method—email, text message, or app notification.

OpenAI admits the system may not be flawless, warning of potential false alarms. Still, the company stresses that it prefers to err on the side of caution by alerting parents, rather than risking silence in critical moments. The company is also preparing procedures for involving law enforcement or emergency services in cases where a parent cannot be reached or when immediate danger is suspected.

Involving Experts and Advocates

OpenAI emphasized that the parental controls were developed in collaboration with mental health specialists, youth advocates, and policymakers. The company’s blog post on the update framed the move as a “first step,” with improvements expected over time as more feedback is collected.

Robbie Torney, senior director of AI Programs at Common Sense Media, described the changes as a “good starting point.” Torney, who recently testified before the U.S. Senate about AI risks, referenced the Raine case as an example of what can go wrong when safeguards are insufficient. He argued that OpenAI had access to billing information from Raine’s paid subscription and could have built systems to flag troubling behavior and intervene—but did not.

Similarly, Dr. Mitch Prinstein of the American Psychological Association called on lawmakers to require rigorous, independent testing of AI products before they are made available to children and teens. Prinstein warned against manipulative design choices that encourage prolonged engagement, urging companies to prioritize psychological well-being over user retention.

Data and Model Training

Another point of contention lies in how OpenAI uses teen accounts for training purposes. By default, interactions from teen users are included in the datasets that refine future versions of ChatGPT. Parents who wish to prevent their child’s data from being used in this way must actively opt out. Critics argue that defaulting to inclusion undermines the spirit of parental control.

Balancing Innovation with Responsibility

The introduction of parental controls reflects a broader tension in the AI industry: how to balance rapid innovation with ethical responsibility. ChatGPT has become one of the most widely used AI systems in the world, attracting hundreds of millions of users each week. Yet as its reach expands, so too does the responsibility to protect vulnerable groups.

OpenAI acknowledges that no system can be perfect. But by adding parental controls, the company is signaling a willingness to address real-world harms and public pressure. Whether these measures are sufficient remains to be seen. For many parents and advocates, the true test will be how effectively the controls work in practice—and how quickly OpenAI can adapt when gaps are revealed.

MORE NEWS FOR YOU

Help & Support

Help & Support