Gemini 2.5 Flash focuses on efficiency

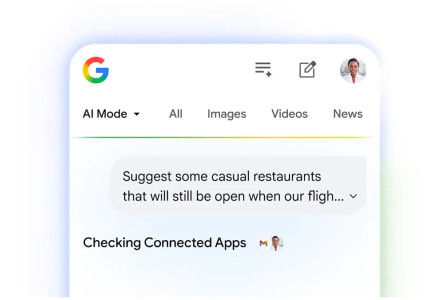

Google is introducing a new AI model designed to deliver strong performance while remaining efficient.

The model, Gemini 2.5 Flash, will soon be available on Vertex AI, Google's AI developer platform. The company says it offers "dynamic and controllable" computing, allowing developers to adjust processing time based on query complexity.

Developers can tune the balance of speed, accuracy, and cost to their specific needs. Google says this flexibility is key to optimizing Flash's performance in high-volume, cost-sensitive applications.
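To make the idea of adjustable processing time concrete, here is a minimal sketch of how an application might pick a compute level per query. Everything in it is an assumption for illustration: `ComputeSetting`, `reasoning_budget`, `estimate_complexity`, and the budget values are hypothetical names and numbers, not part of Vertex AI or any Google SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComputeSetting:
    """Hypothetical per-request setting; not a real Vertex AI type."""
    reasoning_budget: int  # illustrative cap on reasoning effort, arbitrary units


def estimate_complexity(query: str) -> int:
    """Toy heuristic (an assumption): score a query from 0 (simple) to 2 (hard)."""
    score = 0
    # Long queries get one point.
    if len(query.split()) > 30:
        score += 1
    # Queries that ask for explanation or comparison get one point.
    if any(word in query.lower() for word in ("why", "prove", "compare", "step")):
        score += 1
    return score


def choose_setting(query: str) -> ComputeSetting:
    """Map the complexity score to a budget; the numbers are placeholders."""
    budgets = {0: 0, 1: 512, 2: 2048}
    return ComputeSetting(reasoning_budget=budgets[estimate_complexity(query)])
```

In a high-volume setting such as customer support, a router like this would send most short questions through at the lowest budget and reserve extra processing time for the few queries that need it, which is the speed, accuracy, and cost trade-off in miniature.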

Gemini 2.5 Flash debuts as the cost of flagship AI models continues to rise. Lower-priced but capable models such as 2.5 Flash offer an appealing alternative to costlier top-of-the-line options, at the expense of some accuracy.

Gemini 2.5 Flash is a reasoning model, like OpenAI's o3-mini and DeepSeek's R1. This means it takes a little longer to answer questions because it checks its own work before responding.

Google says 2.5 Flash is best suited for high-volume, real-time applications such as customer support and document parsing.

“This workhorse model is optimized specifically for low latency and reduced cost,” Google said in its blog post. “It’s the ideal engine for responsive virtual assistants and real-time summarization tools where efficiency at scale is key.”

Google did not release a safety or technical assessment for Gemini 2.5 Flash, making it more difficult to determine where the model succeeds and falls short.

Google also said on Wednesday that it will bring Gemini models such as 2.5 Flash to on-premises environments beginning in Q3. The company's Gemini models will be available through Google Distributed Cloud (GDC), Google's on-premises offering for clients with stringent data governance requirements.
