Google boosts Gemini’s ability to spot AI-made images

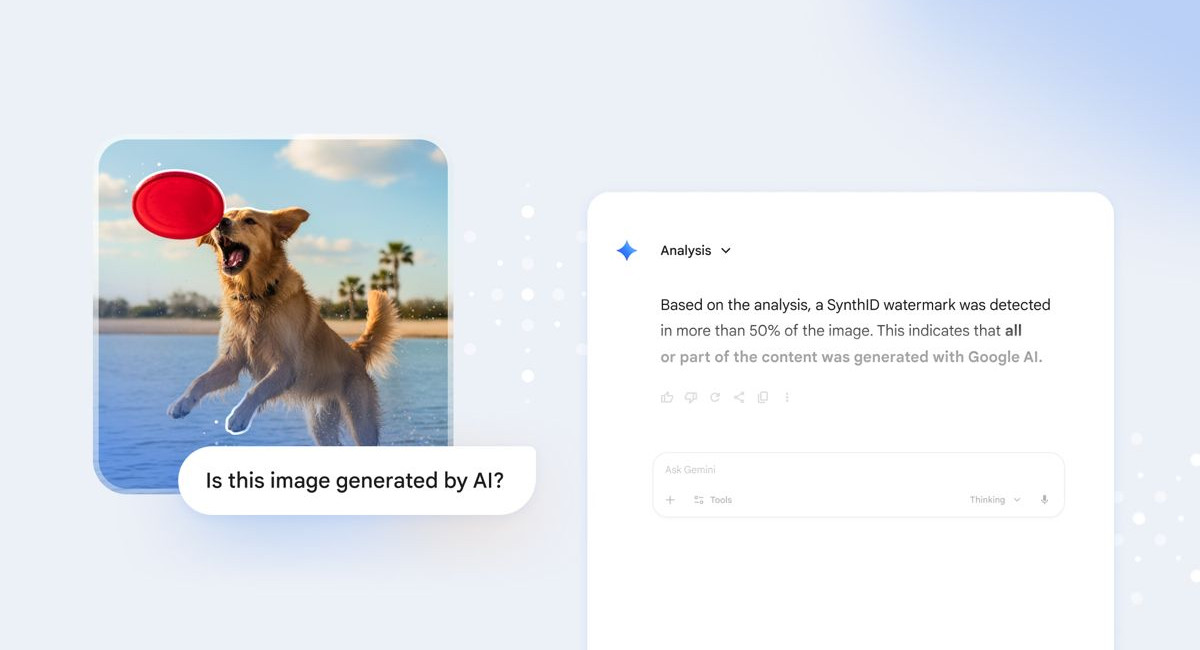

Google is rolling out a new upgrade for Gemini that promises to give users a clearer picture of whether the content they’re viewing has been shaped by artificial intelligence. Anyone using the Gemini app can ask a simple question — “Is this AI-generated?” — to find out if an image was created or altered using Google’s own AI tools. It’s an early but meaningful step in a broader push to make AI-produced media easier to identify as it spreads across the internet.

For now, the feature applies strictly to images. Google says that support for video and audio verification is on the way and that similar tools will eventually appear in other products, including Search. That expansion will matter, especially as AI-generated content grows more sophisticated and harder to distinguish from the real thing. The company’s goal is to make verification accessible wherever people might encounter questionable media, not just inside a single app.

The initial version of the detection system relies entirely on SynthID, Google’s invisible watermarking technology, which marks AI-generated images with signals imperceptible to the human eye. When Gemini analyzes an image, it looks for these embedded markers to determine whether a Google model played a part in creating or editing it. It’s a useful foundation, but one with a clear limit: it works only for content made with Google’s tools.

The next major milestone will come when Google expands Gemini’s verification capabilities to include C2PA credentials. The C2PA standard, backed by a broad coalition of tech and media companies, aims to create an industry-wide system for attaching secure metadata to digital content. This metadata reveals when and how a piece of media was created or modified, enabling verification even when the creator used a non-Google AI tool. Once Gemini supports C2PA, it will be able to identify material generated by a much wider range of tools and creative platforms, including models like OpenAI’s Sora.
