Google redefines AI translation with the launch of TranslateGemma

The artificial intelligence landscape is shifting again as Google introduces its latest work in language technology. TranslateGemma is a new family of open translation models that marks a significant step forward in how machines interpret and convert human language. Built on the Gemma 3 open-weight model, the suite is designed to bridge communication gaps efficiently and accurately.

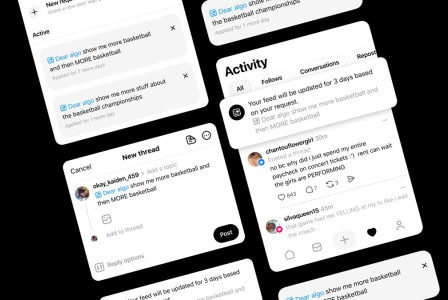

Google's timing is particularly noteworthy: TranslateGemma was unveiled just a few hours after OpenAI launched its competing ChatGPT Translate. While the tech industry is no stranger to rapid-fire competition, Google's approach emphasizes the accessibility and versatility of open models. TranslateGemma supports 55 languages, ranging from widely used languages such as Spanish and French to languages with non-Latin scripts such as Hindi and Chinese. Unlike traditional systems, which often produce overly literal output, these models are designed to capture the nuances of human expression.

Technical flexibility is at the heart of this release. Google offers TranslateGemma in three sizes, 4B, 12B, and 27B parameters, so the technology can be deployed across a wide range of hardware. The 4B version is tailored for mobile devices, bringing high-quality translation to the palm of a user's hand, while the 12B model is optimized for consumer-grade laptops. Notably, results on the WMT24++ benchmark show that the 12B model can outperform much larger base models, giving developers high speed and low latency without sacrificing output quality. For heavy-duty workloads, the 27B model offers the highest accuracy, though it typically requires cloud infrastructure such as an NVIDIA H100 GPU to run at its best.
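To see why the three sizes map onto such different hardware, here is a back-of-the-envelope estimate of the memory needed just to hold each model's weights. It is a sketch that assumes the nominal parameter counts (4, 12, and 27 billion) and common numeric precisions; the helper `weight_memory_gb` and the `table` it fills are hypothetical, and the figures deliberately ignore activations, the KV cache, and runtime overhead, so real requirements are higher.

```python
# Back-of-the-envelope weight-memory estimate for the three TranslateGemma sizes.
# Assumption: nominal parameter counts; real checkpoints differ slightly.
MODEL_PARAMS = {"4B": 4e9, "12B": 12e9, "27B": 27e9}

# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return memory in GB (1 GB = 1e9 bytes) needed to hold the weights only.

    Activations, the KV cache, and framework overhead are NOT included.
    """
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Size-by-precision table of estimates (hypothetical helper structure).
table = {
    name: {dtype: weight_memory_gb(n, dtype) for dtype in BYTES_PER_PARAM}
    for name, n in MODEL_PARAMS.items()
}

if __name__ == "__main__":
    for name, row in table.items():
        cells = ", ".join(f"{dtype}: {gb:.0f} GB" for dtype, gb in row.items())
        print(f"{name}: {cells}")
```

At 16-bit precision the 27B weights alone come to about 54 GB, which fits on an 80 GB H100 but is far beyond a typical phone or laptop. By the same arithmetic, a 4-bit quantized 4B model would need only about 2 GB for its weights.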

The key to TranslateGemma's performance is a two-stage training process. First, Google applied supervised fine-tuning (SFT), training the base models on a mix of human-translated texts and high-quality synthetic translations generated by Gemini. This was followed by a reinforcement learning (RL) phase, in which reward models and automatic quality metrics such as MetricX-QE guided the models toward more natural, contextually appropriate translations. This training also gives the models strong performance at translating text within images, a capability Google reports was confirmed by tests on the Vistra benchmark.
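The two-stage recipe can be illustrated with a deliberately tiny toy: a "policy" that only chooses among three fixed candidate translations. Stage 1 mimics supervised fine-tuning by raising the log-probability of a reference translation; stage 2 mimics reinforcement learning by following the exact policy gradient of expected reward. The candidate sentences, the reference choice, the reward scores, and the function names are all hypothetical stand-ins (a real pipeline would derive rewards from learned reward models and metrics such as MetricX-QE). This is a sketch of the general idea, not Google's training code.

```python
import math

# Hypothetical candidate translations of "It's raining cats and dogs."
CANDIDATES = [
    "Llueve gatos y perros.",      # literal rendering
    "Está lloviendo a cántaros.",  # idiomatic rendering
    "Llueve mucho.",               # acceptable but flat
]
REFERENCE = 2              # hypothetical human reference used in stage 1
REWARDS = [0.1, 1.0, 0.6]  # toy scores standing in for a learned metric (higher = better)


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sft_step(logits, target, lr):
    # Gradient ascent on log p(target): d/d(theta_i) = 1[i == target] - p_i
    p = softmax(logits)
    return [x + lr * ((1.0 if i == target else 0.0) - p[i]) for i, x in enumerate(logits)]


def rl_step(logits, rewards, lr):
    # Exact policy gradient of expected reward: d/d(theta_i) = p_i * (r_i - E[r])
    p = softmax(logits)
    baseline = sum(pi * ri for pi, ri in zip(p, rewards))
    return [x + lr * p[i] * (rewards[i] - baseline) for i, x in enumerate(logits)]


logits = [0.0, 0.0, 0.0]  # start from a uniform policy

# Stage 1 (SFT-like): imitate the reference translation.
for _ in range(50):
    logits = sft_step(logits, REFERENCE, lr=0.5)
after_sft = softmax(logits)

# Stage 2 (RL-like): shift probability toward higher-reward candidates.
for _ in range(500):
    logits = rl_step(logits, REWARDS, lr=2.0)
after_rl = softmax(logits)

if __name__ == "__main__":
    print("after SFT:", [round(p, 3) for p in after_sft])
    print("after RL: ", [round(p, 3) for p in after_rl])
    print("preferred:", CANDIDATES[after_rl.index(max(after_rl))])
```

Stage 1 concentrates probability on the reference, and stage 2 then moves it toward the candidate the reward scores favor. This mirrors, in miniature, how an RL phase refines a model that SFT has already taught to translate.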

By making TranslateGemma available on platforms like Kaggle and Hugging Face, Google is empowering the global developer community to build and experiment. This release isn't just a product launch; it's an invitation to reshape how we interact across borders. As the rivalry between AI giants intensifies, the ultimate winners are the users who gain access to smarter, faster, and more intuitive communication tools.
