
Meta expands Teen Accounts worldwide


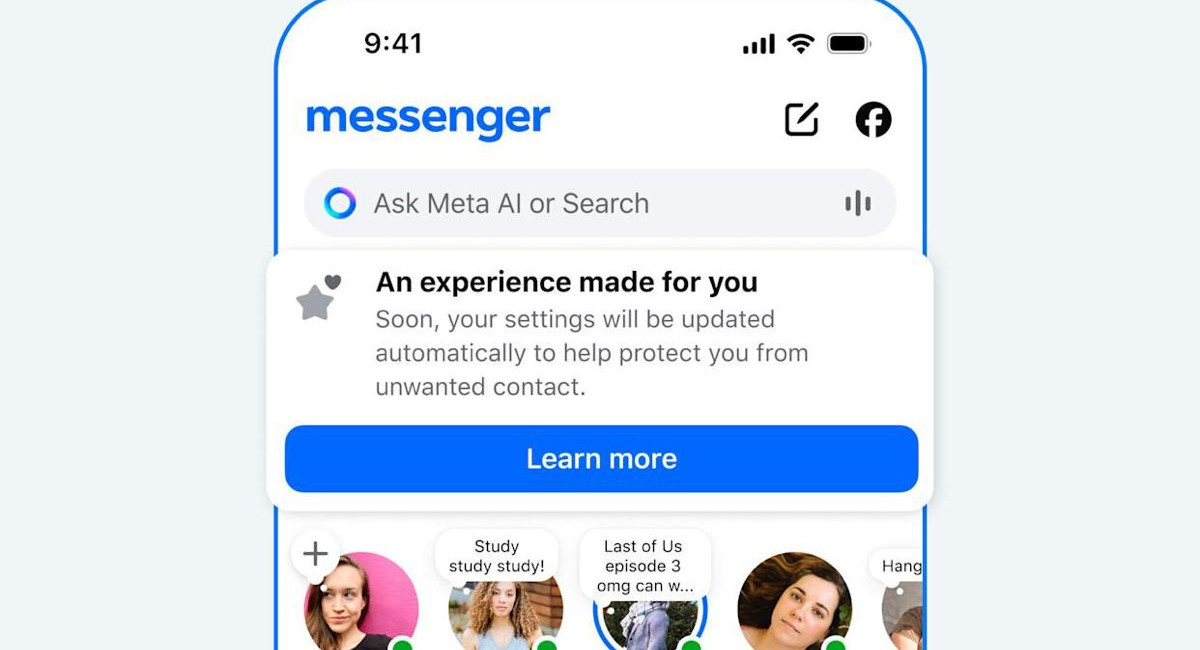

Meta is widening its efforts to protect younger users across its platforms by rolling out dedicated teen accounts for Facebook and Messenger on a global scale. The move comes after a year of testing and gradual introduction, and it represents one of the company’s most visible responses to ongoing criticism of how social media platforms handle the safety of minors.

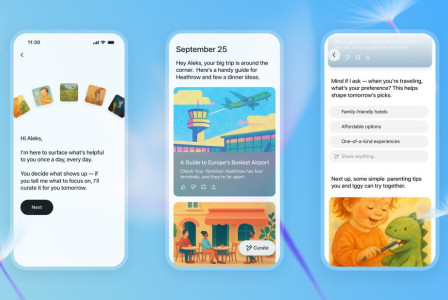

The new accounts, which are already used by millions of teenagers on Instagram, have been designed with parental oversight and stricter safety measures at their core. Meta first introduced the model on Instagram in 2024, before extending it earlier this year to Facebook and Messenger users in the United States, Canada, the United Kingdom, and Australia. With the latest announcement, teen accounts are now becoming a global standard for every underage user.

Meta has confirmed that these accounts are mandatory for all teenagers. Younger teens between the ages of 13 and 15 face an additional layer of oversight, as parental approval is required to adjust any settings related to privacy or safety. The company also relies on artificial intelligence to spot users who may be lying about their age, an issue that has plagued platforms for years. The aim is to make it more difficult for underage users to slip through safeguards and access content or features meant for adults.

What sets teen accounts apart are the tools that allow parents to play a more active role in their children’s digital lives. Parents can monitor screen time, see who their children are messaging, and check that they are not engaging with strangers. The accounts also feature tighter privacy defaults, including restrictions that limit interactions between teenagers and unfamiliar adults. These measures are meant to address long-standing concerns about predators and unwanted contact on social networks.

Instagram, which is owned by Meta, is also expanding a separate initiative focused on combating bullying in schools. Known as the “school partnership program,” it allows middle schools and high schools in the United States to directly report harassment and other problematic behavior to the platform. Originally launched as a pilot with only a small number of institutions, the program has now been opened up to all schools across the country. Meta says it has received positive feedback from educators and administrators who participated in the initial rollout.

The company’s renewed push for safety-focused features comes at a time of heightened scrutiny. Over the last few years, Meta has been under intense pressure from regulators, parents, and child advocacy groups who argue that its apps expose minors to harmful content and interactions. The company currently faces multiple lawsuits and government investigations over its handling of child safety. Meta has consistently defended itself against these accusations by pointing to ongoing product updates and safety investments.

Still, the rollout of teen accounts may signal a shift in how Meta is trying to balance user growth with public trust. While teenagers remain a vital demographic for the company, they are also among its most vulnerable users. Facebook, Messenger, and Instagram have long struggled with the perception that they are unsafe spaces for children, particularly as concerns about cyberbullying, grooming, and mental health impacts have grown louder.
