Now you can have normal conversations with Google's AI Mode in real time

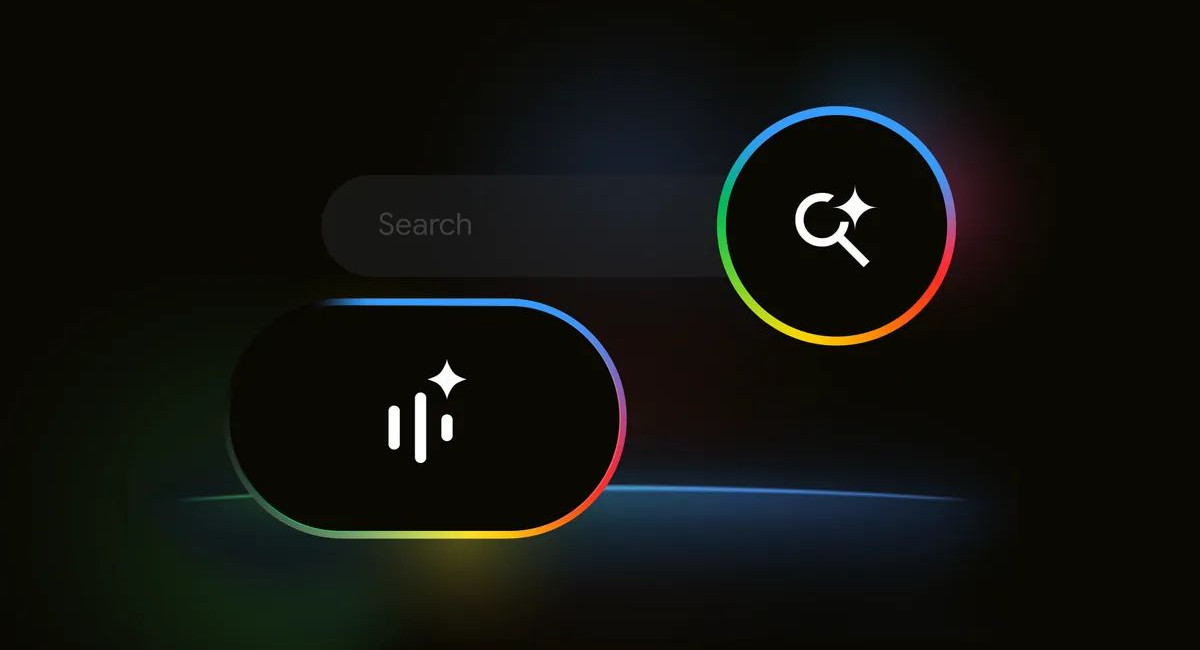

Google is currently trialing a new feature that allows users to engage in real-time voice conversations with its AI chatbot directly through the search engine. Dubbed Search Live, the feature is now being rolled out in experimental mode to users in the United States who are enrolled in Google Labs. While still in testing, this addition marks a key step in Google’s broader ambition to transform the way people interact with Search.

Integrated into AI Mode, the company’s recently launched AI-powered search experience, Search Live lets users ask questions out loud and hear spoken responses in return. The interaction is designed to feel conversational: users can ask follow-up questions naturally, much as they would with a human assistant. During the exchange, relevant links appear on-screen, so users can explore the underlying sources if they want to keep browsing.

To access Search Live, users must first opt into the AI Mode experiment in Google Labs. They can then open the Google app on Android or iOS and tap the new “Live” icon. A voice query such as “How can I keep a linen dress from wrinkling in a suitcase?” prompts the AI to respond with audio. To dig further, the user might ask “What can I do if it still wrinkles?” and receive a follow-up answer within the same conversation.

Although the current version of Search Live does not yet support visual input, Google plans to add camera-based capabilities in the coming months. This would let users point their phone’s camera at an object and ask questions about it out loud, a capability similar to one already offered by Gemini Live in the standalone Gemini app.

This move is part of Google’s ongoing efforts to reshape Search into a more interactive and multimodal tool. In parallel, the company is also experimenting with features such as Audio Overviews, which aim to deliver podcast-like summaries within search results. These developments reflect a wider trend across the tech industry to incorporate more human-like, voice-driven interfaces into AI services.

Competitors have been active in this space as well. OpenAI introduced an Advanced Voice Mode for ChatGPT last year, offering similar back-and-forth interactions. Anthropic followed suit with a voice mode for its Claude app this past May. Meanwhile, Apple is working on integrating large language model capabilities into Siri, though rollout has reportedly been delayed due to challenges in achieving reliable performance.

One notable advantage of Search Live is its background functionality. Users can switch between apps while continuing their voice conversation with the AI, enhancing multitasking. Additionally, the option to view transcripts of the AI’s responses and continue the interaction via text adds flexibility. Previous conversations are also saved in AI Mode history, allowing users to revisit them at any time.

In sum, Search Live signals a major step forward in Google’s efforts to make its search engine not just a tool for information, but a personalized and interactive assistant accessible through natural voice interactions.