Opera introduces local LLMs to its Opera One browser

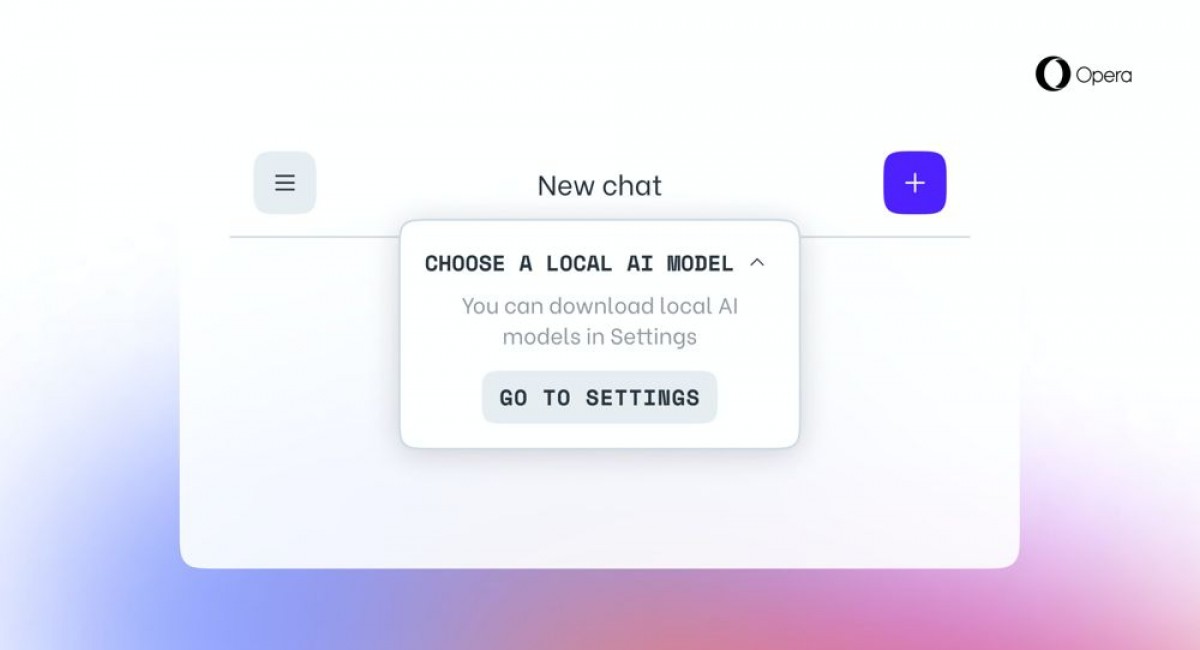

Opera has officially announced that it will add experimental support for 150 local LLM (Large Language Model) variants from about 50 model families to its Opera One browser in the developer stream. This is the first time that local LLMs can be easily accessed and managed from a major browser through a built-in feature. The local AI models complement Opera's online Aria AI service.

Among the supported local LLMs are:

- Llama from Meta

- Vicuna

- Gemma from Google

- Mixtral from Mistral AI

- And many more families

Using local Large Language Models means that users' data stays on their device, allowing them to use generative AI without having to send that data to a server. Opera One's AI Feature Drops Program lets early adopters try experimental versions of the browser's AI features, including the new local LLMs in the developer stream.

As of today, Opera One Developer users can choose which model processes their input. To test the models, users need to upgrade to the latest version of Opera Developer and enable the new feature. Once a user selects a local LLM, it is downloaded to their machine. The local LLM, which typically requires 2-10 GB of local storage per variant, is then used instead of Aria, Opera's default browser AI, until the user starts a new chat with the AI or switches Aria back on.

In early 2023, Opera introduced Opera One, its AI-centric flagship browser built on Modular Design principles and a new browser architecture with a multithreaded compositor that allows smoother-than-ever processing of UX elements. Opera One includes the Aria browser AI, which can be accessed through either the browser sidebar or the browser command line. Aria is also available in the gamer-focused Opera GX, as well as in the Opera browser for iOS and Android.
