YouTube's double-down strategy: tackling AI slop while empowering digital twins

The digital landscape is currently witnessing a complex tug-of-war between the rise of synthetic content and the preservation of platform integrity. YouTube, the world’s largest video-sharing ecosystem, has found itself at the heart of this evolution. In a recent strategic update, the company’s leadership outlined a dual-track approach: an aggressive crackdown on what is being called AI slop, contrasted with the rollout of sophisticated new tools that allow creators to generate AI versions of themselves.

Neal Mohan, the CEO of YouTube, addressed these challenges in his latest annual letter. He acknowledged the growing frustration among users regarding low-quality, repetitive, and spam-heavy AI-generated content. This phenomenon, increasingly labeled as AI slop, threatens to clutter feeds and diminish the user experience. To counter this, Mohan emphasized that YouTube is doubling down on its detection systems. The goal is to filter out the noise and ensure that the platform remains a space for meaningful engagement rather than a warehouse for algorithmic spam.

Interestingly, Mohan drew a parallel between the current skepticism surrounding AI and the early days of niche content categories like ASMR or gaming walkthroughs. He suggested that while new formats often face initial resistance, they eventually find their place in the mainstream. This perspective indicates that YouTube does not intend to ban AI content outright but rather to refine its moderation to distinguish between creative innovation and mindless automation. The platform’s philosophy appears to be rooted in maintaining a balance between free expression and a high-quality viewer experience.

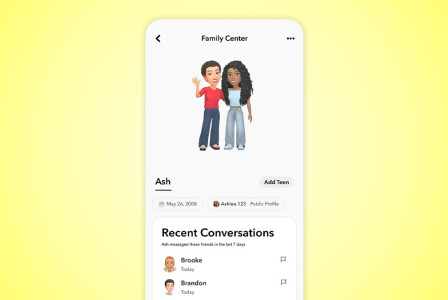

While the company moves to clean up the platform, it is simultaneously handing more powerful AI tools to its creative community. One of the most talked-about announcements is a new feature for Shorts that enables creators to generate content using an AI-backed digital likeness of themselves. This effectively allows for the creation of authorized deepfakes, where a creator can appear in a video without having to physically film every scene. This move is designed to streamline production and offer new ways for influencers to scale their output.

However, this technological leap raises significant questions about security and authenticity. Just last October, YouTube introduced a likeness-detection system intended to protect individuals from unauthorized deepfakes. Running a tool that creates deepfakes alongside a system that detects them poses a delicate technical challenge: YouTube will need to ensure that its internal filters can distinguish between a creator using their own AI twin and a malicious actor attempting to impersonate them.

The scale of AI adoption on the platform is already staggering. According to company data, more than one million channels used YouTube's AI-powered creation tools daily during December 2025. In 2026, the platform plans to expand these capabilities further. Upcoming features are expected to let creators build interactive games from simple text prompts and experiment with AI-driven music composition, further blurring the line between traditional video and interactive media.

This pairing of stricter quality standards with new generative tools is not unique to YouTube. Industry leaders, including Microsoft CEO Satya Nadella, have recently argued that for AI to avoid becoming a speculative bubble, it must deliver tangible, real-world value. For YouTube, the challenge lies in execution. As the debate over AI slop continues, the platform is attempting to find a middle ground. The success of this strategy will depend on whether the public eventually accepts AI-assisted production as a legitimate form of creativity or continues to view it with suspicion.